With the rapid development of technology, AI has been integrated into our lives in many ways. Accurate machine learning and natural language processing (NLP) make these systems smart. But is this trend only good for humans? How can we trust AI with our data? In this capstone group project with two IBM mentors, we aimed to address AI trust issues, specifically trust in chatbots. We chose the chatbot on Dyson's official website for a redesign and ultimately came up with a conversational AI chatbot that focuses on privacy and other areas to build more trust.

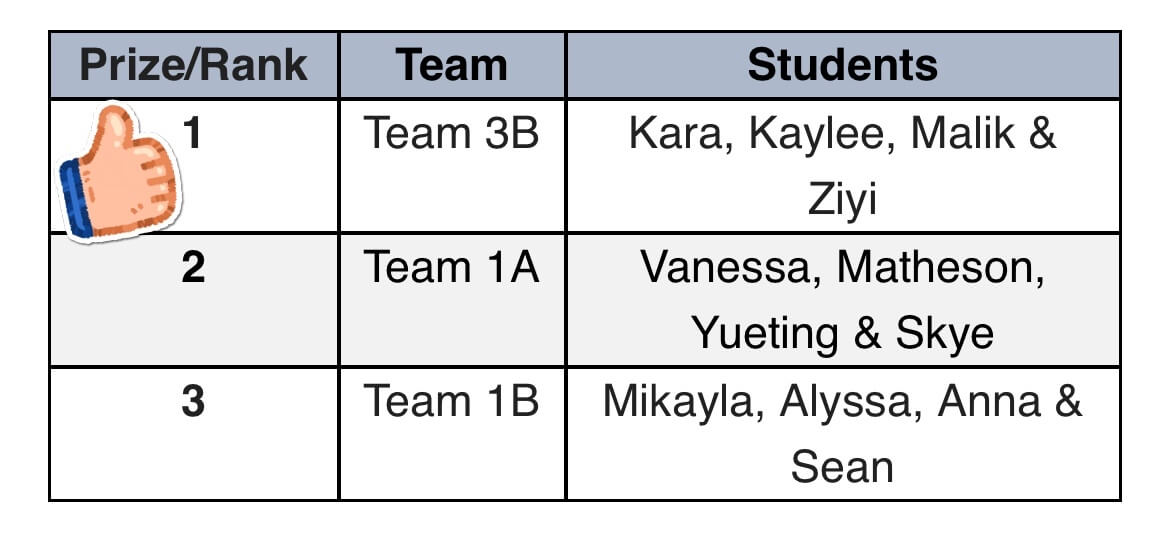

I'm super excited to share that our design solution has won first place in the MDEI program capstone project competition! I really appreciate all the hard work of my team members and support from instructor Tobias Thielen as well as IBM mentors Gord Davison and Will Ragan.

Artificial intelligence systems are rapidly starting to dominate our app and tech services. But it's important to remember that the AI is only as intelligent, fair and ethical as the data it is fed and the values of its creators.

Designers and developers of AI systems are responsible for understanding the impact of the systems they build and for considering the ethics of their work.

Only through transparency and ethical consideration can designers create the trust users need to adopt and use the systems and applications they build.

This was an intensive 14-day project aimed at providing solutions for AI trustworthiness and ethics. Working with IBM mentors, we focused on the business prompt below:

How might we help our users build trust in an AI-infused tool or application?

By analyzing the ethical problems of AI technologies and investigating various chatbots on the market, we set our project scope: redesigning the chatbot on Dyson's website to build user trust and support AI ethics.

We started by looking into the various types of AI technologies and decided to narrow our scope to chatbots, as research shows a strong need for reliable chatbot services in the market.

80% of customer interactions can be resolved by well-designed bots

60% of customers want easier access to self-serve solutions for customer service

50% of enterprises will spend more on bots than on traditional mobile app development by 2021

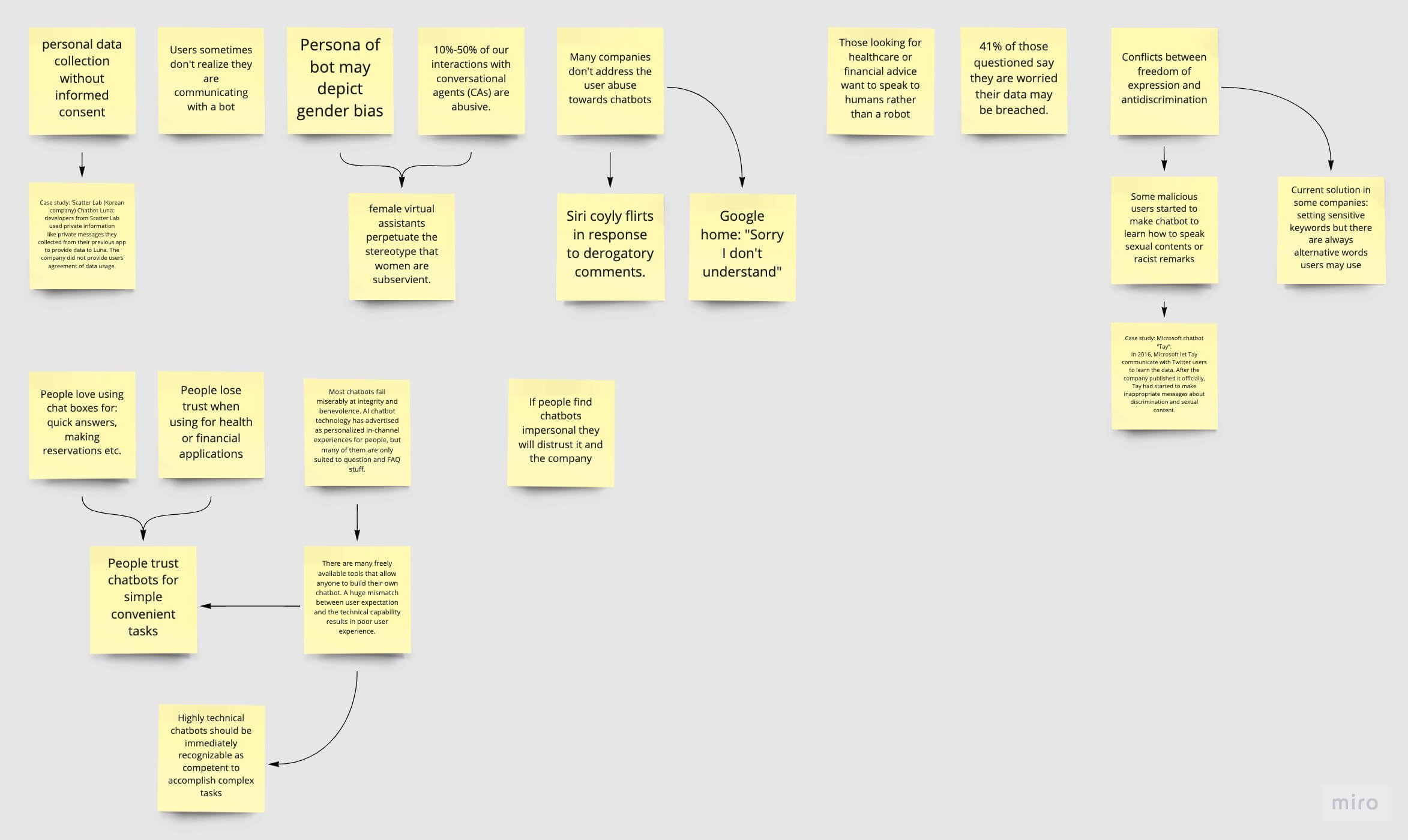

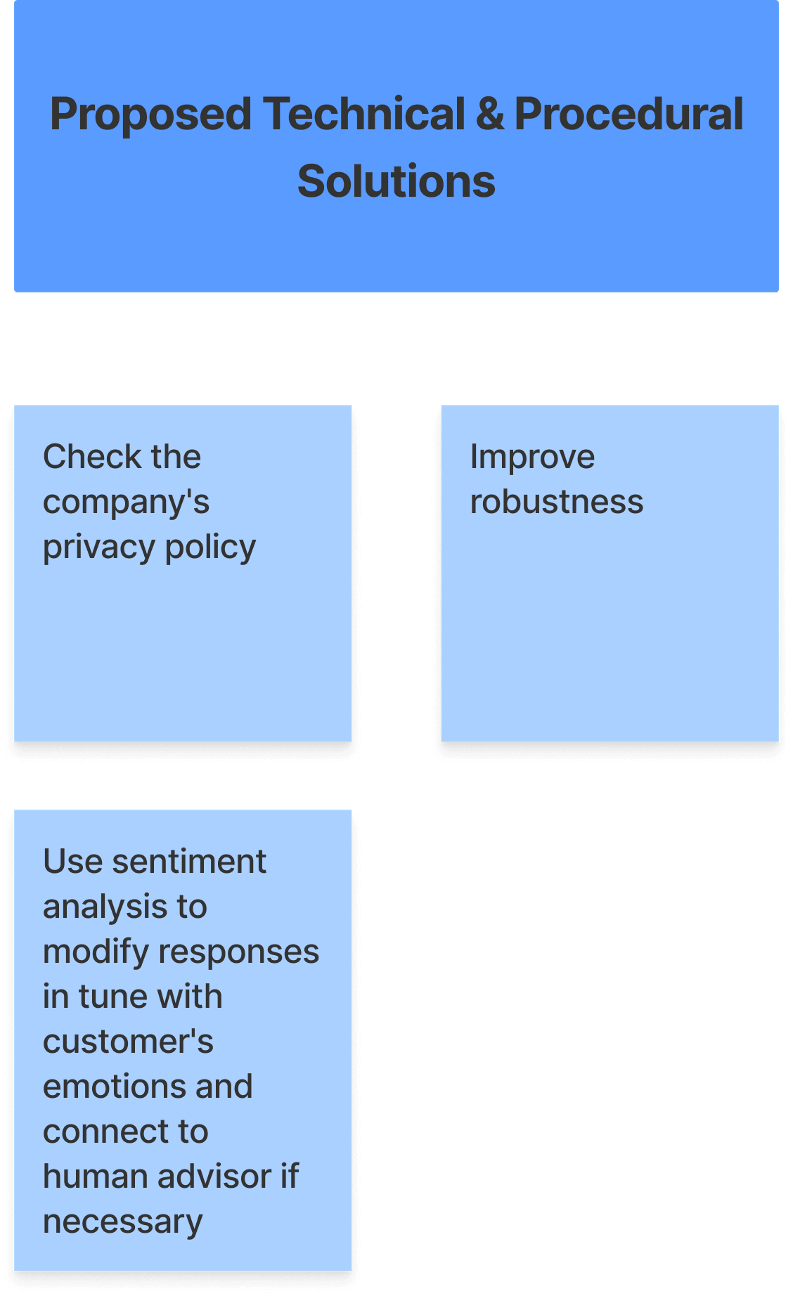

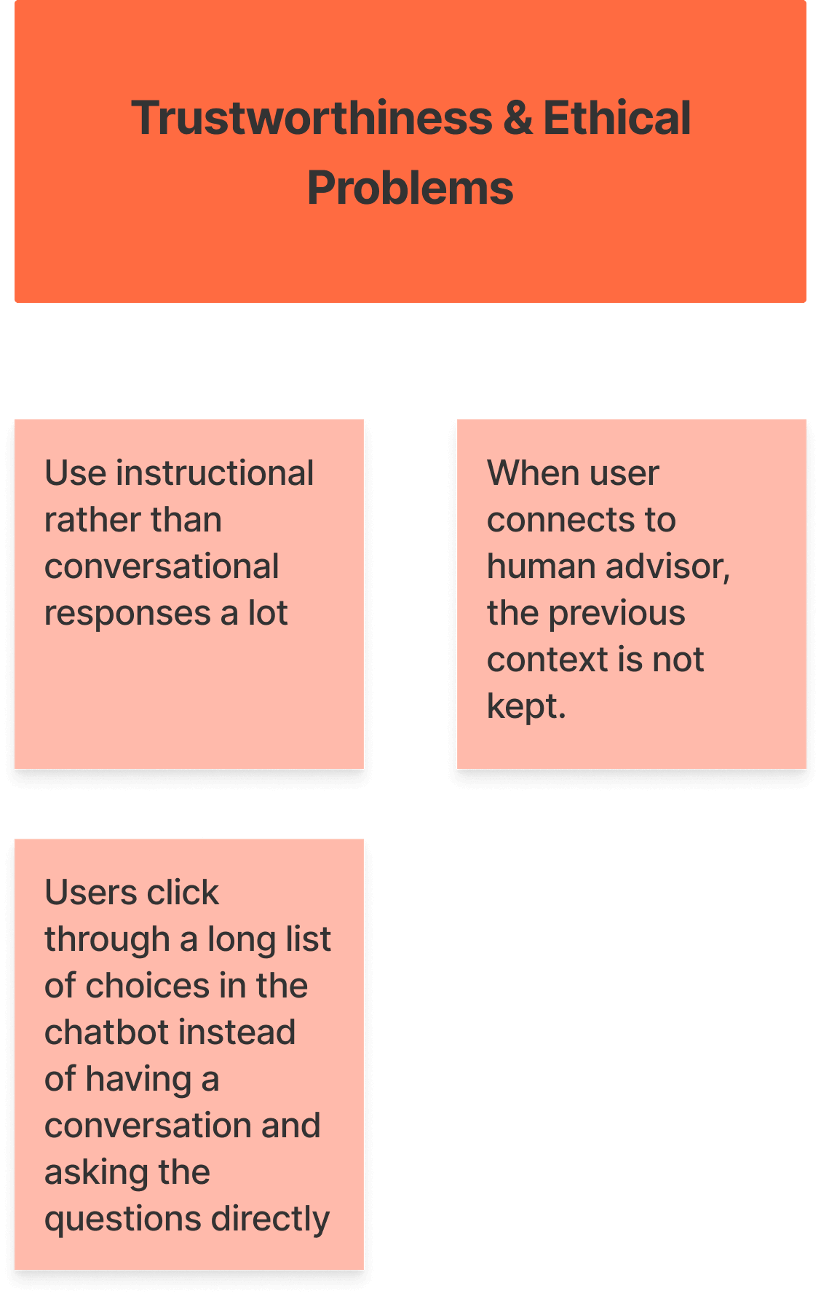

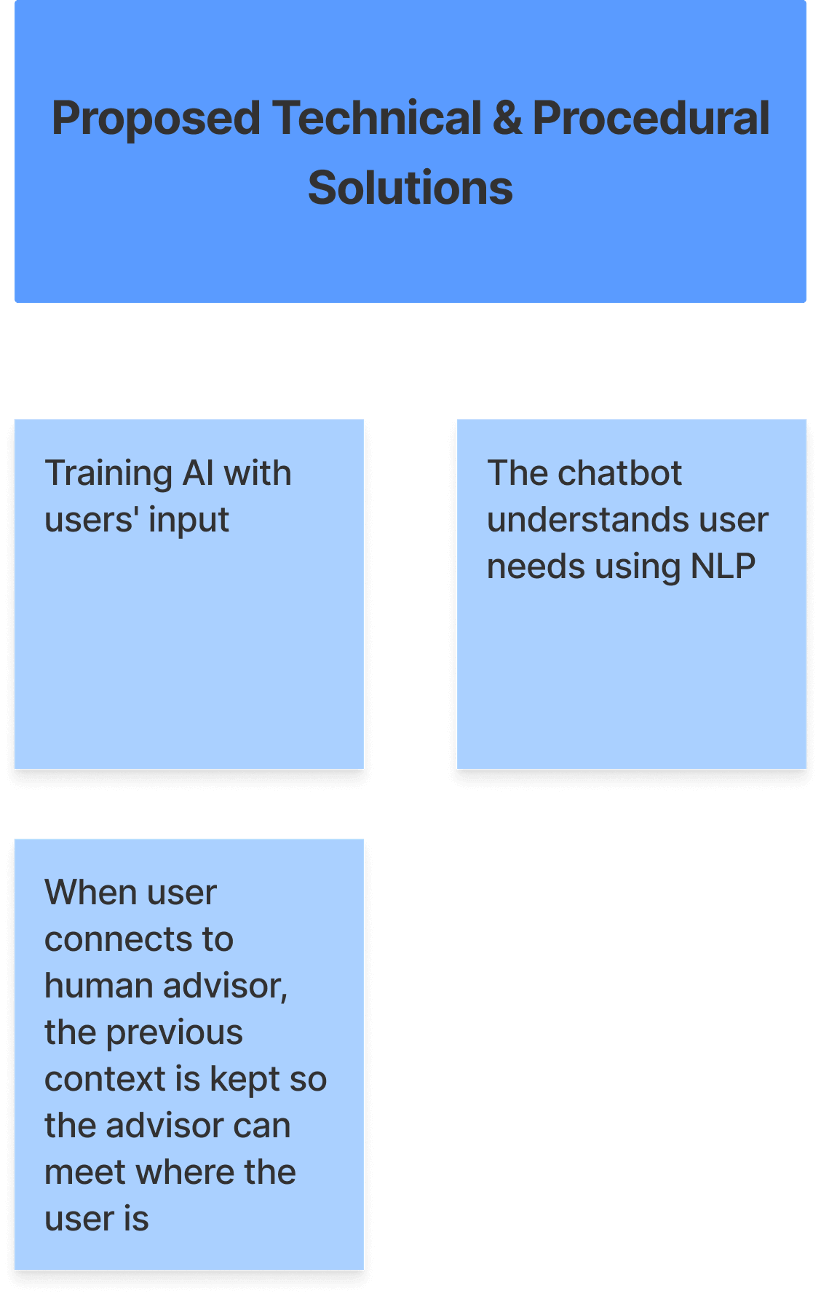

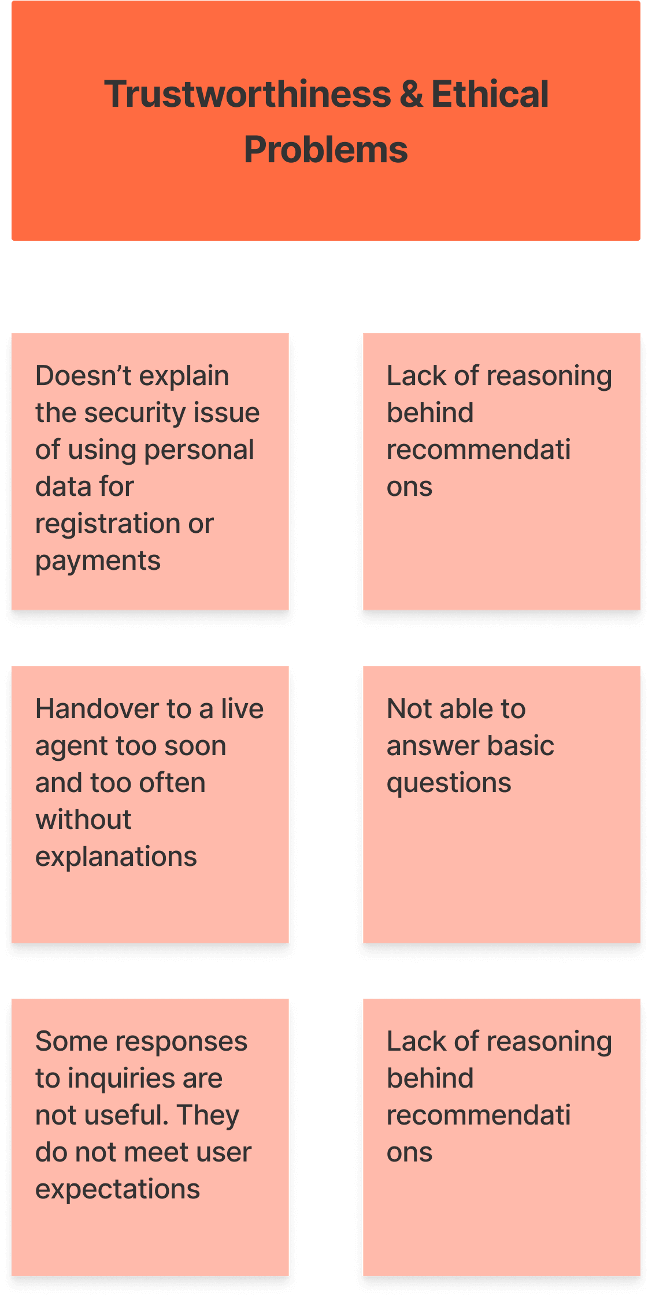

We first identified the general ethical issues that affect chatbots.

We then narrowed our scope down to e-commerce chatbots and identified problems that prevent user trust in e-commerce chatbots.

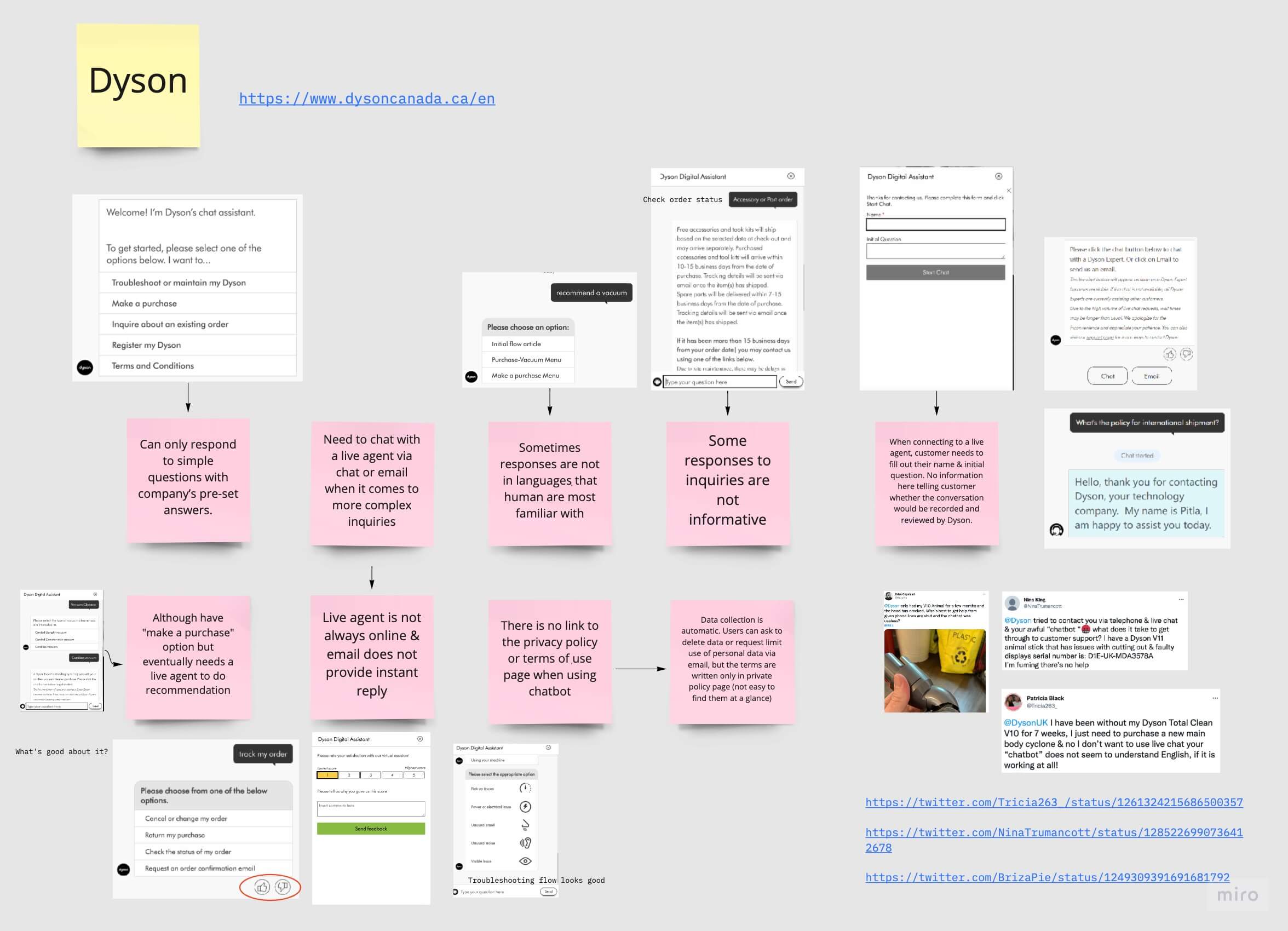

Finally, we looked at the various chatbots on the market and defined their general problems. We found Dyson's chatbot to be the most problematic, with the pain points listed below, so we chose it for our redesign.

Users have to click through a long list of choices

System defaults to connecting users to a human advisor

Conversation context lost when reaching a human

Lack of easily accessible privacy policy

These pain points pointed to a single underlying problem: the chatbot does not use conversational AI.

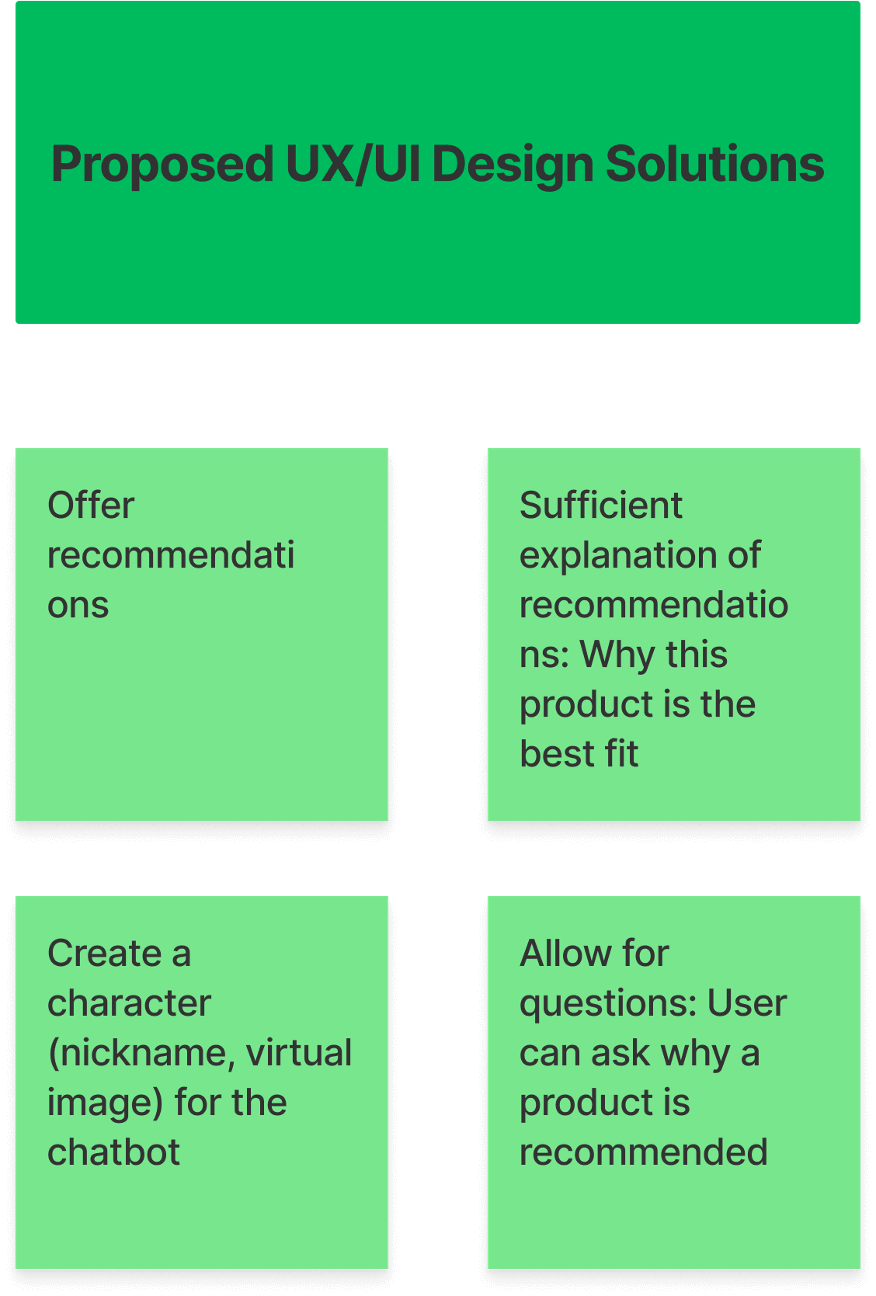

A conversational AI can better help with trust issues by supporting:

Conversational AI refers to AI tools that users can talk to. These tools use data, machine learning, and natural language processing to recognize speech and text inputs and imitate human interaction.

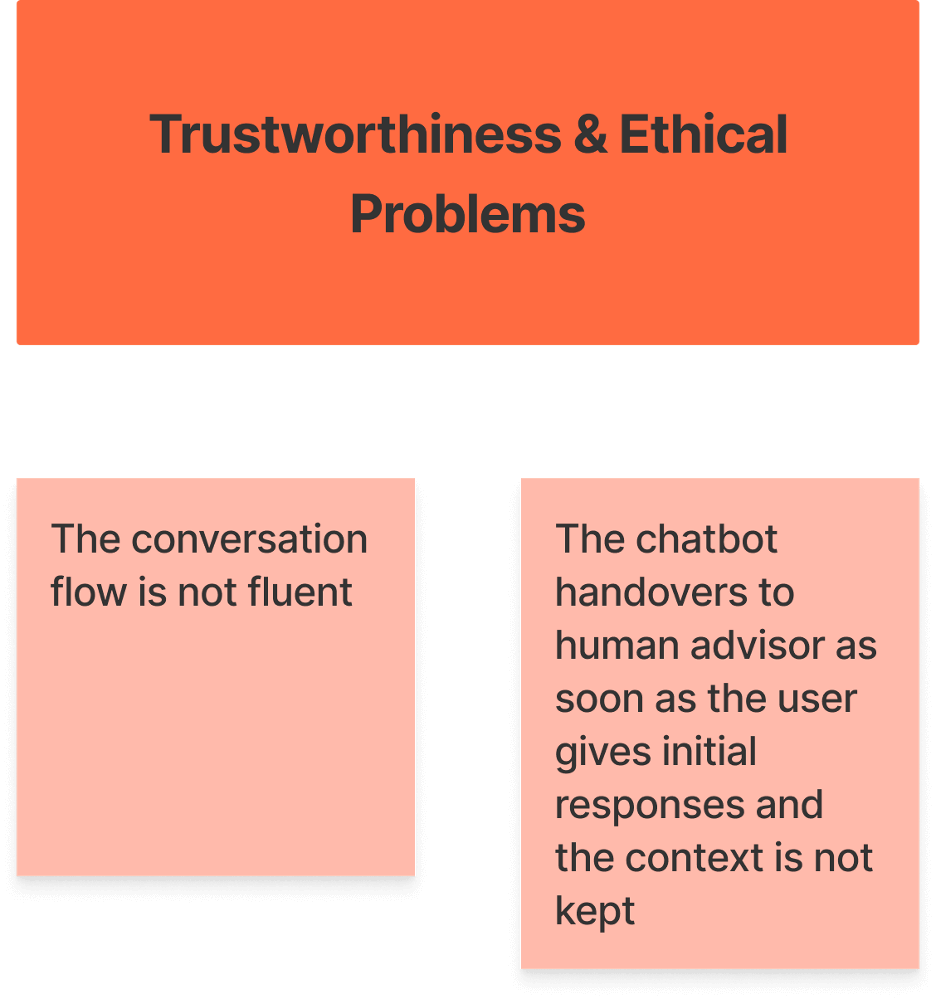

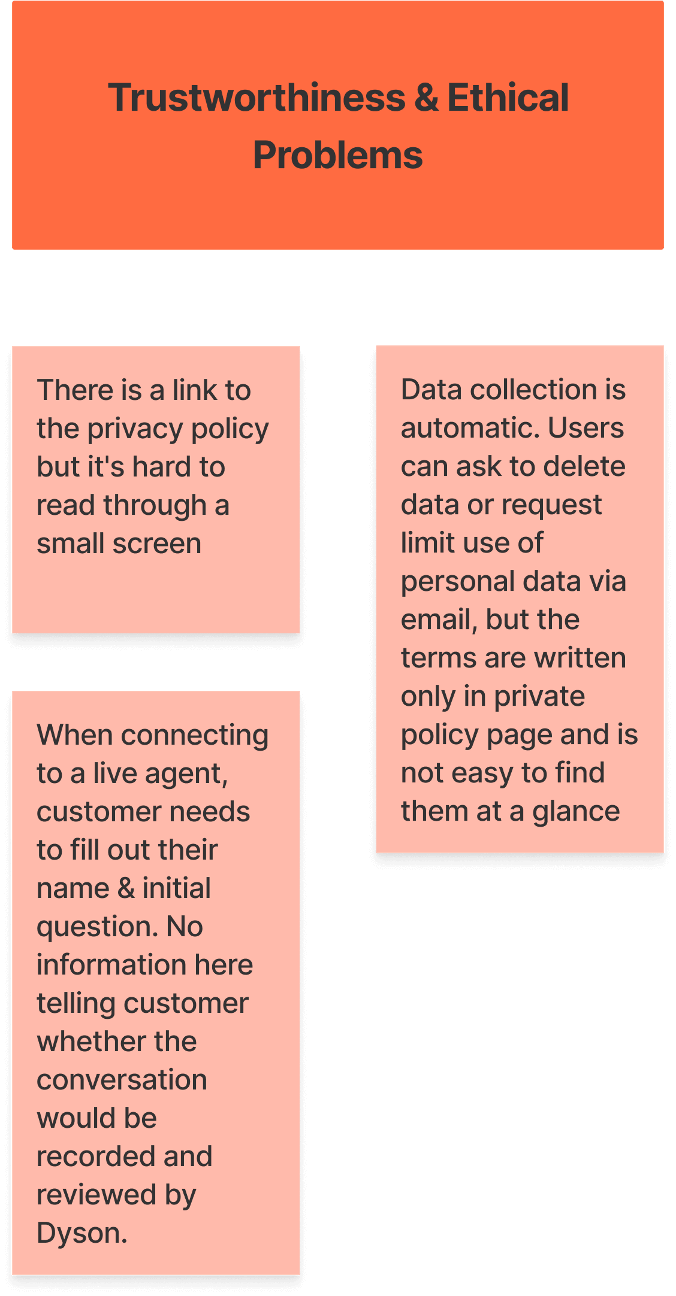

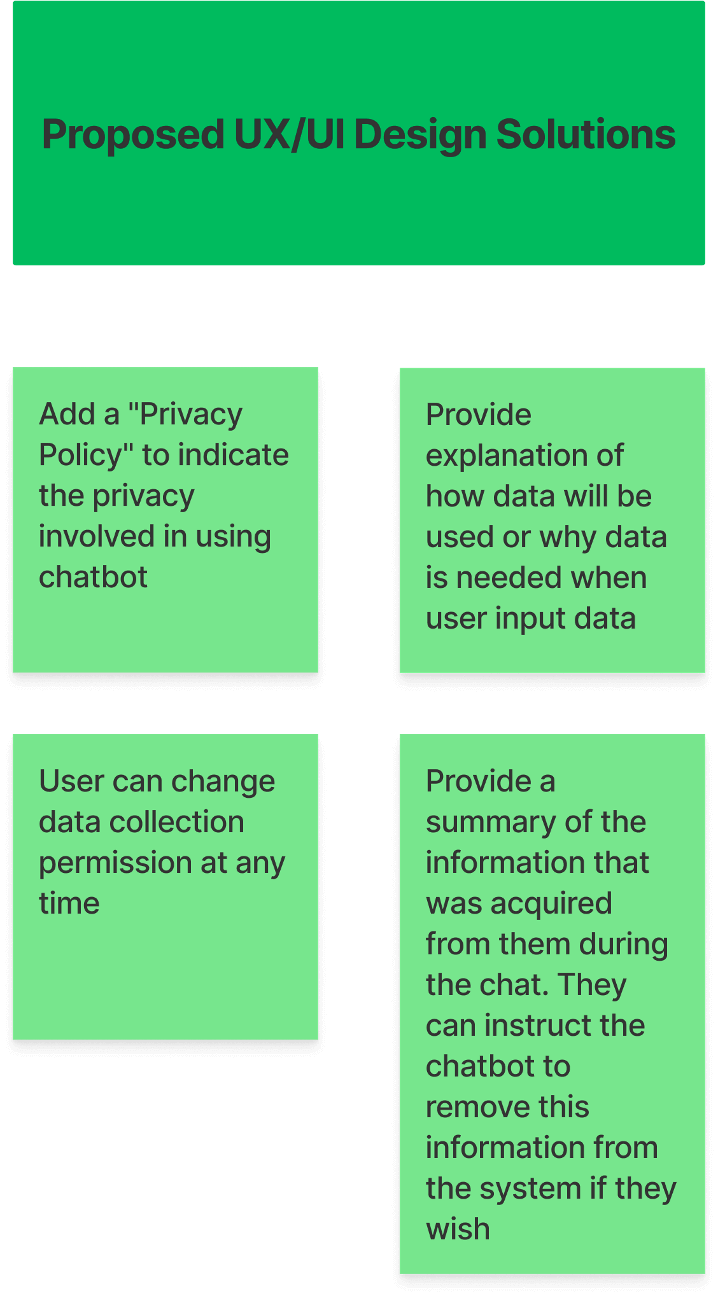

We categorized the issues into IBM's five areas of ethical focus: accountability, value alignment, explainability, fairness, and user data rights. The areas provide an intentional framework for establishing an ethical foundation for building and using AI systems.

Accountability: AI designers and developers are responsible for considering AI design, development, decision processes, and outcomes.

Value alignment: AI should be designed to align with the norms and values of your user group.

Explainability: AI should be designed so that humans can easily perceive, detect, and understand its decision process.

Fairness: AI must be designed to minimize bias and promote inclusive representation.

User data rights: AI must be designed to protect user data and preserve the user's power over its access and use.

Let's meet our user, Julie, a sales director who values quality and efficiency. She wants to buy a vacuum cleaner, and Dyson is her first choice, but with so many options, it is difficult for her to know which one is best for her.

We then mapped out the user flow for purchasing with the chatbot, which helped us figure out how the chatbot should behave to meet user expectations. We initially put the entire checkout process in the chatbot.

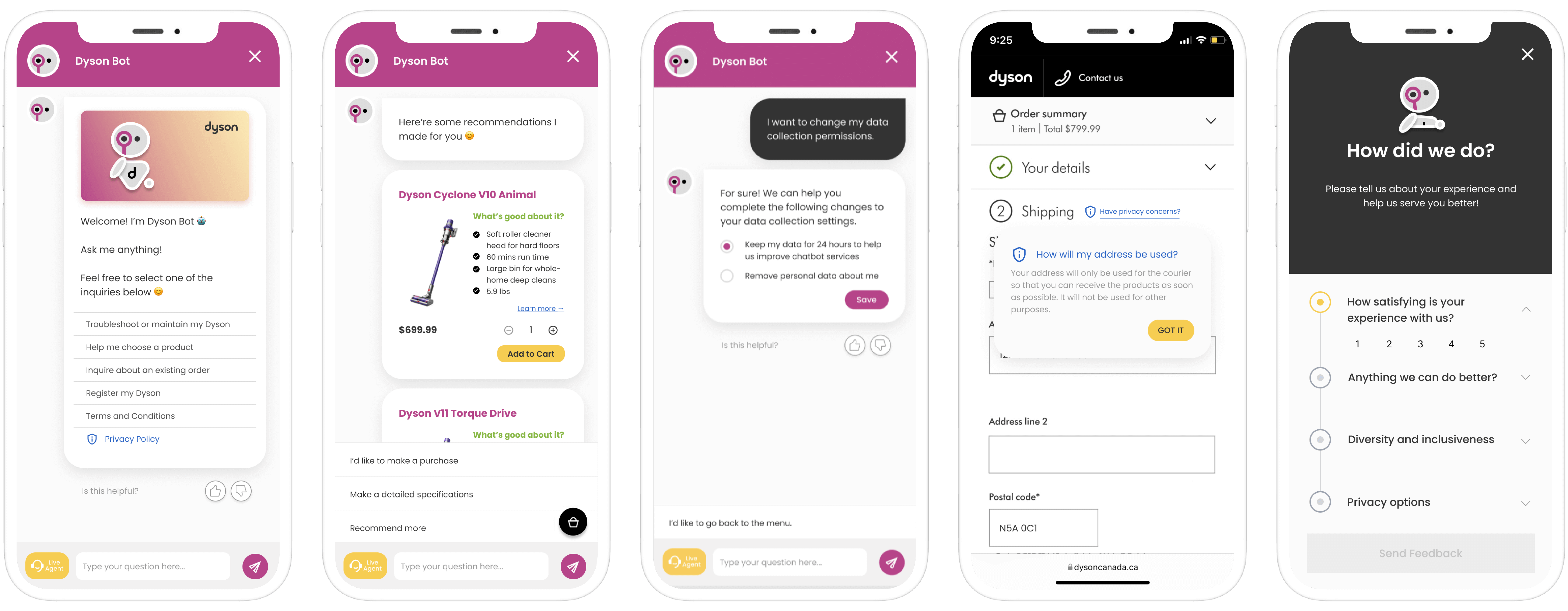

We then designed a low-fidelity prototype with five flows: product recommendation, checkout with an existing account, checkout with a new account, privacy policy, and end-chat survey.

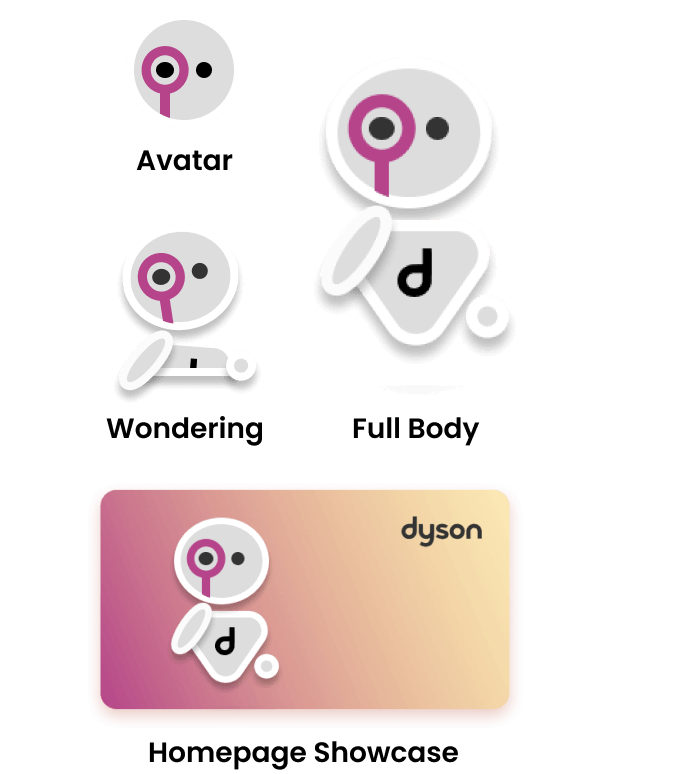

We designed a gender-neutral chatbot character named 'Dyson Bot'. The design is inspired by the image of Sir James Dyson, the billionaire entrepreneur who founded Dyson Ltd.

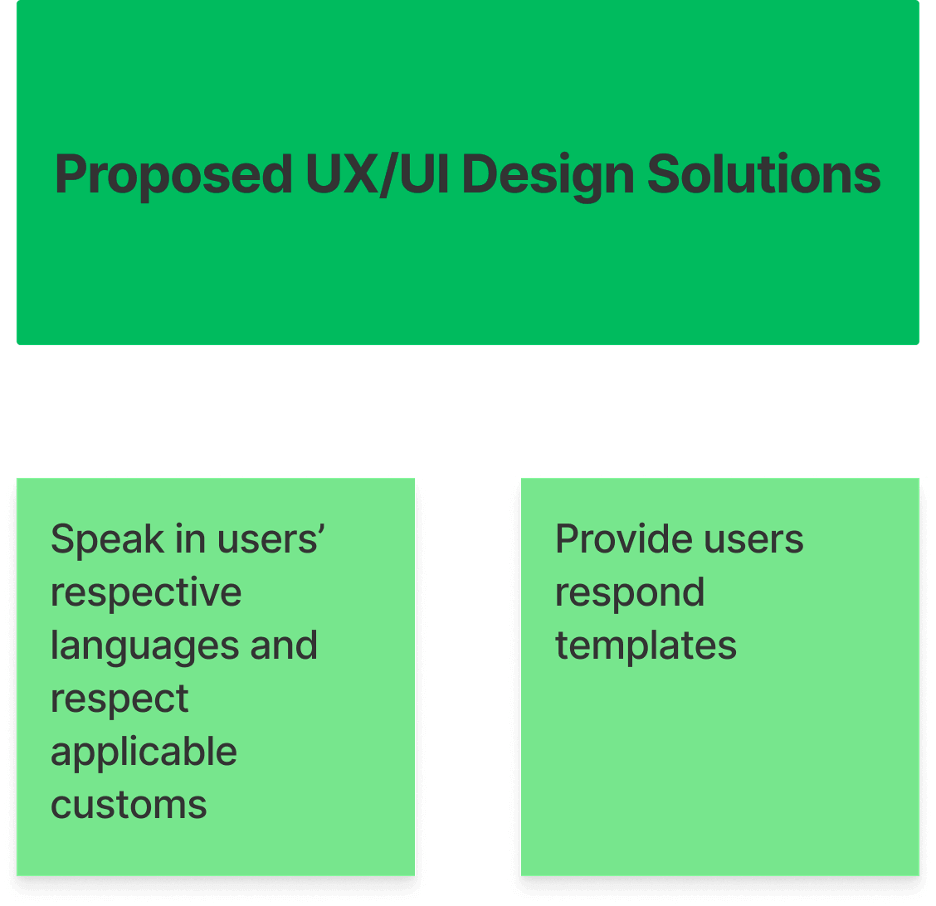

The final high-fidelity prototype consisted of five flows: product recommendation, checking out a vacuum cleaner, troubleshooting a vacuum cleaner, privacy policy, and end-chat survey.

There are eight design highlights, each of which helps address trust issues.